
The analysis and interpretation of results should be robust, unbiased, and anchored around the evaluation questions established by your evaluation plan.

The type of analysis required, and the value it can add to the understanding of the appropriateness, efficiency and/or effectiveness of your program or activity, will depend on your evaluation design and the volume of data you have collected.

It is important to ensure you have the necessary time, skills, knowledge and resources available to understand and analyse the data you have collected.

This can be a technical step, so in some cases it is advisable to seek expert advice. Expert input can help ensure that results are interpreted robustly and accurately, and that findings are reported in a way that protects participant privacy.

Things to consider

Do the results meet the objectives of the program?

- What do you need to know?

- What methods will you use to analyse the data?

- Does the evidence answer your key evaluation questions?

- Data needs context – how will you place your data in context?

- What are you comparing?

What is the most appropriate analysis method for your data/evidence and to sufficiently answer your evaluation questions? How will you check for patterns, themes and/or trends in the data?

- How well do you understand your data?

- Are there patterns or themes you can see in the data (e.g. changes over time, or changes compared with a known dataset or benchmark)?

- Can you code your qualitative data and evidence into different categories?

- How will you validate and verify your analysis?

- How do you ensure the privacy of participants?
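One common way to validate qualitative coding is to have two people code the same material independently and then measure how far they agree beyond what chance alone would produce. The guidance does not prescribe a method. The sketch below assumes Cohen's kappa as the agreement measure, and both the function name `cohens_kappa` and the theme codes are hypothetical.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Agreement between two coders, corrected for agreement expected by chance."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must code the same non-empty set of items")
    n = len(coder_a)
    # Observed agreement: share of items both coders put in the same category.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: based on how often each coder used each category.
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # both coders used one identical category for every item
    return (observed - expected) / (1 - expected)


# Hypothetical theme codes assigned by two coders to the same six interview excerpts.
coder_a = ["cost", "access", "access", "quality", "cost", "access"]
coder_b = ["cost", "access", "quality", "quality", "cost", "access"]
print(round(cohens_kappa(coder_a, coder_b), 2))  # prints 0.75
```

A value of 1 means perfect agreement and 0 means agreement no better than chance. Low values suggest the coding categories may need clearer definitions before the analysis proceeds.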

How do you know that this program or activity actually led to (or contributed to) the changes/outcomes? Could something else have worked?

- How does the evidence support your program logic and theory of change?

- Does the evidence point to other interventions that may have had the same (or better) outcomes?

- What are the conclusions based on the evidence and your theory of change?
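Part of answering "could something else have caused this?" is comparing the change in the program group with the change in a similar group that did not receive the program. Difference-in-differences is one common way to do this. It is used here purely as an illustration, and it only gives a credible estimate if both groups would otherwise have followed parallel trends. The function name and the outcome scores below are hypothetical.

```python
from statistics import mean


def difference_in_differences(program_before, program_after,
                              comparison_before, comparison_after):
    """Change in the program group minus change in a comparison group.

    Assumes that, without the program, both groups would have followed
    parallel trends; if that assumption is doubtful, so is the estimate.
    """
    program_change = mean(program_after) - mean(program_before)
    comparison_change = mean(comparison_after) - mean(comparison_before)
    return program_change - comparison_change


# Hypothetical outcome scores for sites with and without the program.
estimate = difference_in_differences(
    program_before=[50, 54, 52], program_after=[60, 62, 64],
    comparison_before=[49, 51, 50], comparison_after=[54, 56, 55],
)
print(f"{estimate:.1f}")  # prints 5.0
```

Here the program group improved by 10 points, but the comparison group also improved by 5. Only the remaining 5 points are a candidate for the program's contribution, and external factors should still be considered.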

Analyse and interpret

High-quality, robust analysis is fundamental to demonstrating the effectiveness of government, because it provides the evidence that programs and activities are delivering their intended benefits to Australians.

It also helps uphold the APS values of impartiality, accountability, respect and ethical behaviour, and ensures that the people we serve – the government, Parliament and the public – can trust our analysis and advice.

Your evaluation plan and stakeholder engagement will determine the appropriate quality of your data and evidence, and the most appropriate methodology for producing results in the context of relevant risks. If unavoidable time constraints prevent this, the limitation should be explicitly acknowledged and reported.

Skills and knowledge

You will need the right mix of skills and knowledge to collect the evidence and analyse it appropriately.

Ideally, there will be an independent quality assurance process in the “Analyse and interpret results” phase of your evaluation. This will form part of your report (see Report findings).

Your analysis, just like your evaluation, needs to be fit for purpose and proportionate to the risks associated with the intended use of the evaluation.

High quality analysis needs to:

- be both rigorous and unbiased

- be repeatable and objective, and be carried out in context with a view to having impact

- understand and manage uncertainty

- address the key evaluation questions robustly.
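To illustrate what "understanding and managing uncertainty" and "repeatable" can mean in practice, the sketch below reports a range of plausible values for an average rather than a single number, using a fixed random seed so that anyone rerunning it gets the same result. It assumes a percentile bootstrap, which is one of several possible techniques and is not prescribed by this guidance. The function name `bootstrap_ci` and the scores are hypothetical.

```python
import random


def bootstrap_ci(sample, n_resamples=5000, level=0.95, seed=1):
    """Percentile bootstrap confidence interval for the mean of `sample`."""
    rng = random.Random(seed)  # fixed seed so the result can be reproduced
    n = len(sample)
    # Resample with replacement many times and record each resample's mean.
    means = sorted(sum(rng.choices(sample, k=n)) / n for _ in range(n_resamples))
    # Take the middle `level` share of the resampled means as the interval.
    lower = means[int((1 - level) / 2 * n_resamples)]
    upper = means[int((1 + level) / 2 * n_resamples) - 1]
    return lower, upper


# Hypothetical participant satisfaction scores on a 1 to 7 scale.
scores = [3, 5, 4, 6, 5, 7, 4, 5, 6, 5]
low, high = bootstrap_ci(scores)
print(f"Mean {sum(scores) / len(scores):.1f}, 95% CI {low:.2f} to {high:.2f}")
```

A wide interval is a signal to report findings cautiously, or to collect more data before drawing firm conclusions.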

All these factors play an important role in developing good-quality analysis and using it appropriately.

Remember that your program or activity is only ever contributing to change – this is true of both positive and negative outcomes. If the program or activity is demonstrating poor outcomes, both internal and external factors should be analysed in order to explain them.

Stakeholders

It’s also good practice to check in with your key stakeholders during the analysis phase to verify their reporting requirements (when and in what form they would like to see the results), as well as to alert them to any potential issues with the evaluation.

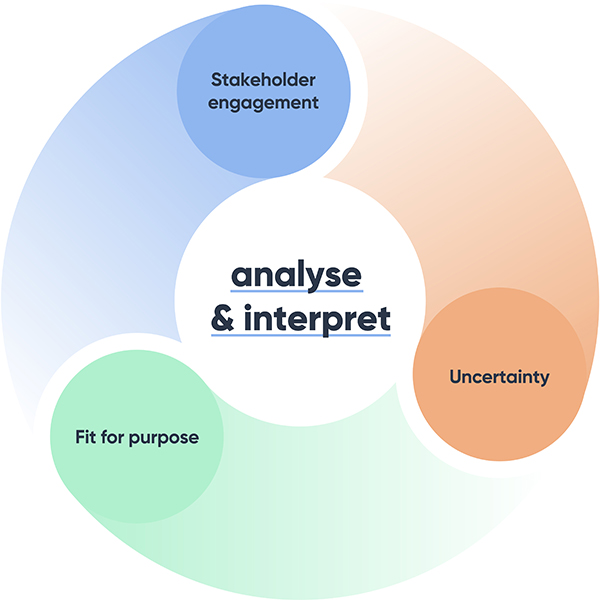

The figure below outlines the various aspects of analysis that you could keep in mind.

Adapted from The Aqua Book: guidance on producing quality analysis for government (UK Treasury) [PDF 1.0MB] page 24.

There is usually a trade‑off between the resources and time available for the analysis and the level of quality assurance that can be completed. For any analysis undertaken as part of an evaluation, these competing demands need to be weighed against each other.

See Templates, tools and resources for more information and examples that will help you ensure your analysis produces credible and trustworthy results.