
No one‑size‑fits‑all approach

There is no one‑size‑fits‑all approach to monitoring and evaluating a Commonwealth program or activity – you need to develop performance monitoring and evaluation approaches that are appropriate to your entity's purposes and key activities.

Evaluative approaches can be used to support, complement or enhance the regular and ongoing performance monitoring and reporting of activities and programs by managers against expected results.

It is important to clarify upfront whether you need an evaluation. If you do, be clear about its purpose and audience, be targeted in sourcing the right expertise, skills and knowledge, and be constructive in the way you plan to use the results.

Things to consider

Is there a common understanding in your entity of what needs to be evaluated and why?

- Is there a government directive to do an evaluation?

- Is the activity or program significant in achieving government objectives?

- Has existing performance information suggested an area where improvement is necessary?

- Has there been a substantive change proposed to the design of the activity or program?

Have key stakeholders agreed on the objectives of the proposed evaluation?

- Who are the key stakeholders for the proposed evaluation?

- What are their interests and expectations?

- Have the information needs of stakeholders and the intended use of the evaluation been documented and defined?

- Have the interests of all stakeholder groups been considered, including those with needs and views that may not be shared with the wider population?

- Have stakeholders been engaged to review the evaluation objectives and develop specific and measurable evaluation questions and data collection methodologies?

How will the evaluation help my entity to achieve its purpose/s?

- Is there a theory of change or logic model that helps to describe how the activity or program is supposed to work (that is, what you plan to achieve and how you measure success)?

- How is this activity or program expected to contribute to the achievement of my entity's purpose/s?

Select evaluation focus

Under the Commonwealth Performance Framework, there are ongoing requirements to measure, assess and report on the performance of government activities and programs.

Evaluations are one way of generating evidence to support decision‑making, accountability and learning, and to meet performance reporting obligations under the Public Governance, Performance and Accountability Act 2013 and associated instruments.

Taking time in the early stages of planning an evaluation to develop a common understanding of what needs to be evaluated, and why, is critically important.

Establishing clear evaluation objectives upfront, and identifying the key evaluation questions that need to be addressed, means your evaluation is more likely to:

- be well focussed

- deliver the information needed to fulfil its purpose

- be sensitive to any cultural, privacy or ethical issues.

Key dimensions

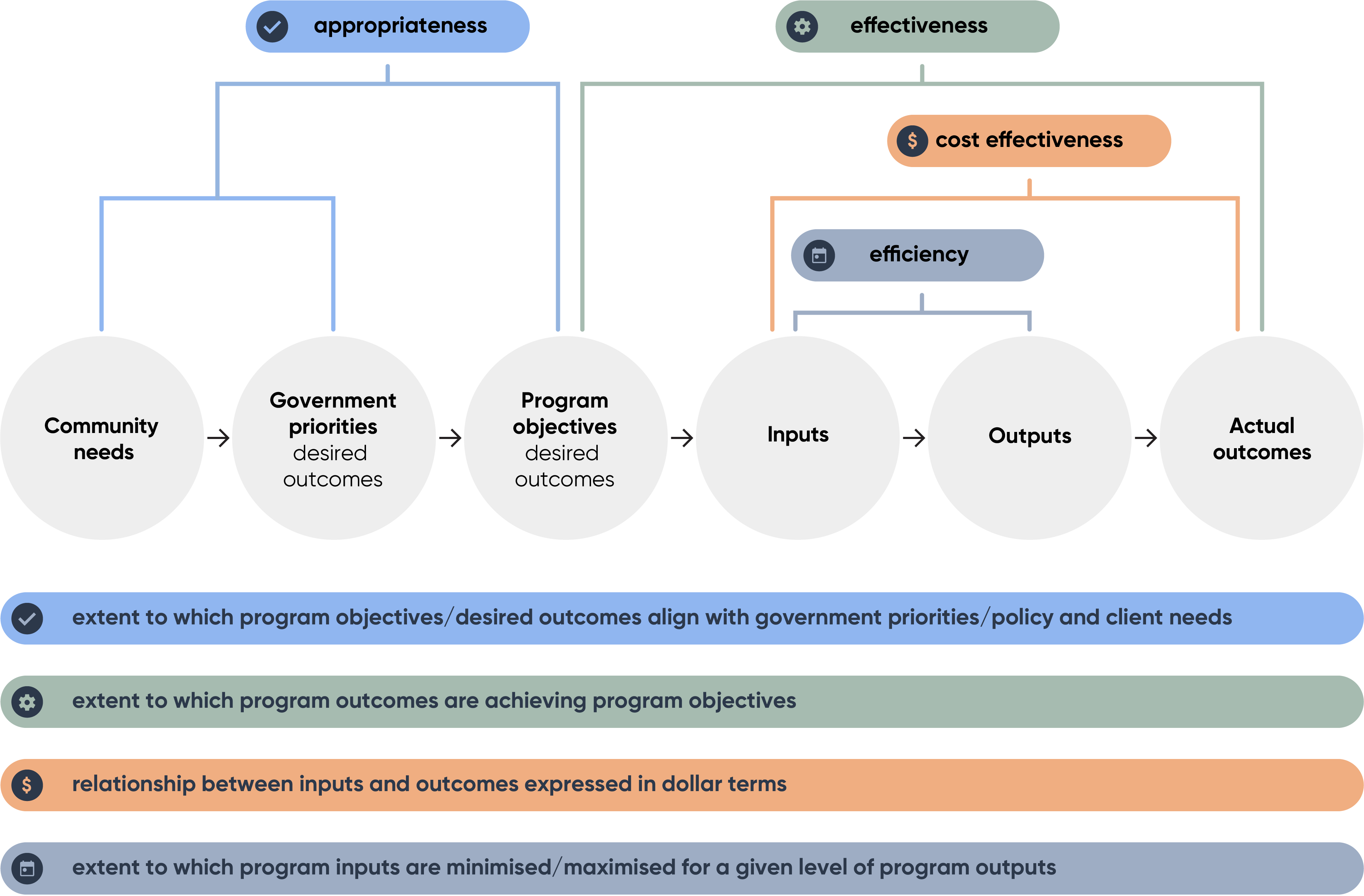

Evaluations often focus on assessing the appropriateness, efficiency and/or effectiveness (including “cost‑effectiveness”) of a specific government activity, policy or program.

- These concepts relate to different elements of the activity/program structure, and to each other, in terms of the:

  - policy basis

  - program objectives

  - inputs

  - outputs

  - actual outcomes

  - results or benefits achieved.

- Determining whether some, or all, of these concepts will be evaluated for a specific government activity, policy or program will help you to define the scope of an evaluation.

The relationship between these concepts is shown in the diagram above.

Organising key evaluation questions under these categories allows you to assess the degree to which a particular program/activity is appropriate, effective and/or efficient in particular circumstances.

Appropriateness

An appropriate program or activity is one where:

- there is an identified need

- the government decides that addressing this need is consistent with its overall objectives

- the priority given to addressing this need is higher than that accorded to competing needs in the allocation of scarce government resources

- the strategy adopted to address the need is likely to be successful (that is, the program logic is sound and suited to the targeted cohort).

Before implementation

The assessment of appropriateness is initially undertaken before a program or activity is implemented, and can be revisited periodically during the life of the program or activity. Revisiting it is particularly important where the program or activity has been in operation for some time, or when there are changes in the political, economic and/or social context of the program.

As appropriateness is about comparing options (addressing which need has greater priority, and which strategy is likely to be most effective) it is a relative concept. Over time, the appropriateness of a program or activity may increase or diminish.

Efficiency

Efficiency is generally measured as the cost of producing a unit of output, expressed as a ratio of inputs to outputs.

A process is efficient where the production cost is minimised for a certain quality of output, or outputs are maximised for a given volume of input.

In the Commonwealth, efficiency is generally about obtaining the most benefit from available resources: that is, minimising the inputs used to deliver policy or other outputs of the required quality, quantity and timeliness.

Efficiency evaluations therefore focus on:

- inputs: the resources used (financial, staff, technology/equipment, and so on)

- processes (activities/strategies/operations): used to produce the outputs of the program/activity

- outputs: the tangible deliverables/products/items delivered by the program or activity, over which management has direct control (for example, the number of training courses delivered, income support payments issued, grants funded, ministerial letters written, publications disseminated, applications processed, and so on).

As efficiency is about “doing it better” or “getting more bang for the buck”, it is a relative, rather than an absolute, concept.

It is not possible to say that a program is efficient in absolute terms, only that it is more or less efficient than, say, this time last year, or compared with other regional locations or similar programs overseas (and the basis of such comparisons would need to be carefully considered).
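As a minimal sketch of the ratio of inputs to outputs, and of why efficiency only makes sense as a comparison, the Python below calculates cost per output for two periods and the percentage change between them. The program, its costs and its output counts are hypothetical figures chosen only to illustrate the arithmetic; a real comparison would also need to confirm that output quality and the basis for comparison are consistent across periods.

```python
from dataclasses import dataclass


@dataclass
class PeriodResult:
    """Inputs and outputs for one reporting period (hypothetical figures)."""
    label: str
    total_cost: float        # inputs: dollars spent on staff, systems, etc.
    outputs_delivered: int   # outputs: e.g. applications processed


def cost_per_output(period: PeriodResult) -> float:
    """Efficiency as a ratio of inputs to outputs (lower cost per output is better)."""
    if period.outputs_delivered <= 0:
        raise ValueError("outputs_delivered must be positive")
    return period.total_cost / period.outputs_delivered


def relative_efficiency(current: PeriodResult, baseline: PeriodResult) -> float:
    """Percentage change in unit cost compared with a baseline period.

    A negative value means each output cost less than in the baseline, so the
    program is relatively more efficient. It does not show that the program is
    'efficient' in any absolute sense.
    """
    base = cost_per_output(baseline)
    return (cost_per_output(current) - base) / base * 100


# Hypothetical figures for illustration only.
last_year = PeriodResult("last year", total_cost=500_000, outputs_delivered=10_000)
this_year = PeriodResult("this year", total_cost=480_000, outputs_delivered=12_000)

print(f"{last_year.label}: ${cost_per_output(last_year):.2f} per application")
print(f"{this_year.label}: ${cost_per_output(this_year):.2f} per application")
print(f"Change in unit cost: {relative_efficiency(this_year, last_year):+.1f}%")
```

With these made-up figures, unit cost falls from $50.00 to $40.00 per application, a change of -20.0%. The result is only meaningful relative to the baseline chosen, which is why the choice of comparison needs careful justification.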

Measuring efficiency

Measuring efficiency involves making comparisons, and this will need to be reflected in the evaluation design and in the data collection and analysis strategies. For more information see Determine scope and approach and Define evidence and data sources.

Effectiveness

Effectiveness is defined as the extent to which a program’s outcomes achieve its stated objectives.

Effectiveness is also concerned with:

- measuring the factors affecting achievement of outcomes

- establishing cause‑effect interpretations as to whether the outcomes were caused by the program or activity, or external factors

- identifying whether there are unanticipated outcomes which are contributing to the achievement of objectives or impacting negatively on clients.

Accountability

Accountability in the Commonwealth focuses on the setting of objectives, producing and reporting on outcomes, and the visible consequences of getting things right or wrong. Hence, evaluating effectiveness is explicitly about being accountable for your program or activity.

Effectiveness evaluations can only be undertaken when it is reasonable to expect that outcomes have been achieved, which may be later in the life of a program or activity or even after it has been completed. This will depend on the stage of the policy cycle and how long a program or activity takes to show results (that is, to fully demonstrate the achievement of outcomes).

Challenges

Evaluating effectiveness can be particularly challenging because of the need to tease out whether the outcomes have been caused by the program or activity or by other factors. It also requires developing a counterfactual: an estimate of what would have happened to the beneficiaries of the program or activity in its absence.

Impact evaluations

Effectiveness evaluations that develop a counterfactual are called ‘impact evaluations’. These evaluations help us answer a specific, but important, question about government programs and policies.

Questions about program impact are causal in nature, that is, we are asking if the program caused the change in outcomes. The field of causal inference gives us a set of tools that we can use to help answer these kinds of questions.

For detailed resources see Impact evaluations.
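To make the idea of a counterfactual more concrete, the sketch below uses difference‑in‑differences, which is one of several causal inference techniques (others include randomised controlled trials and matching methods) and is not the only or necessarily the best choice for a given program. It assumes a comparison group that did not receive the program and whose outcomes would have changed in parallel with participants' outcomes had the program not existed. The employment rates are hypothetical.

```python
def difference_in_differences(
    treated_before: float,
    treated_after: float,
    comparison_before: float,
    comparison_after: float,
) -> float:
    """Estimate program impact as the change for participants minus the
    change for a comparison group.

    The comparison group's change stands in for the counterfactual: what would
    have happened to participants without the program. This relies on the
    'parallel trends' assumption, which cannot be verified directly.
    """
    change_treated = treated_after - treated_before
    change_comparison = comparison_after - comparison_before
    return change_treated - change_comparison


# Hypothetical employment rates (%) before and after a training program.
impact = difference_in_differences(
    treated_before=40.0,
    treated_after=55.0,
    comparison_before=42.0,
    comparison_after=50.0,
)
print(f"Estimated impact: {impact:+.1f} percentage points")
```

A simple before‑and‑after comparison would credit the program with the participants' full 15‑point rise. Netting out the comparison group's 8‑point rise, which reflects external factors, attributes about 7 points to the program, provided the underlying assumptions hold.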

Consider the logic underpinning an evaluation

Theories of change and logic models are often used to describe how and why a desired change is expected to happen in a particular context. In doing so, they help you link what you want to achieve with how you will measure success.

A logic model is typically a step‑by‑step diagram that details inputs (for example, money, staff, resources) needed to deliver your activities and how they should lead to short, medium and long‑term outcomes.
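A logic model can also be recorded as a simple data structure, which makes it easier to check that each outcome you may want to evaluate has a defined measure. The Python sketch below is illustrative only: the class names, field names, the example job‑readiness program and its measures are all hypothetical, not a prescribed Commonwealth format.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Outcome:
    description: str
    horizon: str                    # "short", "medium" or "long" term
    measure: Optional[str] = None   # how success will be measured, if defined


@dataclass
class LogicModel:
    inputs: list[str]        # e.g. money, staff, resources
    activities: list[str]    # what the program does with those inputs
    outputs: list[str]       # deliverables under direct management control
    outcomes: list[Outcome] = field(default_factory=list)

    def unmeasured_outcomes(self) -> list[Outcome]:
        """Outcomes with no measure yet; these cannot be evaluated as they stand."""
        return [o for o in self.outcomes if o.measure is None]

    def by_horizon(self, horizon: str) -> list[Outcome]:
        """Outcomes expected over a given time horizon."""
        return [o for o in self.outcomes if o.horizon == horizon]


# Hypothetical program used only to illustrate the structure.
model = LogicModel(
    inputs=["funding", "program staff", "training venues"],
    activities=["design and run job-readiness courses"],
    outputs=["number of courses delivered", "number of participants completing"],
    outcomes=[
        Outcome("participants gain job-ready skills", "short",
                "skills assessment at course completion"),
        Outcome("participants find employment", "medium",
                "employment status 6 months after completion"),
        Outcome("reduced long-term unemployment in the region", "long"),
    ],
)

for outcome in model.unmeasured_outcomes():
    print(f"No measure defined: {outcome.description} ({outcome.horizon} term)")
```

In this example only the long‑term outcome lacks a measure, which flags it as either outside the scope of this evaluation or needing a narrower definition before it can be assessed.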

Long term

It is useful to think of longer‑term outcomes as the wider, long‑lasting social change that your program or activity is contributing to. In this way, logic model outcomes vary in how much influence your program or activity has over them and, in turn, in how accountable your program or activity is for achieving them.

Scale

Logic models can be used to help determine the scale, focus and objectives of a particular evaluation based on the value, impact and risk profile of an activity or program.

While a logic model may identify a number of outcomes for a program or activity, evaluation takes time and resources, so it is generally best to evaluate a few outcomes rather than all of them.

To make the evaluation fit for purpose, you may also have to narrowly define your chosen outcomes to ensure that they can be measured appropriately.