
Proportional evaluation effort

The appropriate timing and approach for the evaluation needed to support continuous improvement, accountability and decision‑making should be determined case by case, so that the overall approach is fit for purpose.

Formal evaluation not always required

It is not feasible, cost effective or appropriate to conduct a formal evaluation of all government activities and programs.

Cost balanced against risk

The cost of evaluation must be balanced against the risk of not evaluating.

Sometimes performance monitoring alone will be sufficient to meet the performance reporting requirements under the Public Governance, Performance and Accountability Act 2013.

In some cases, well‑designed data collection and performance monitoring established during the design phase of a new or amended program or activity can help to refine it over time.

In other cases, evaluation will need to be more comprehensive to assess whether an activity or program is appropriate, effective and/or efficient.

Strategic, risk-based approach

A strategic, risk‑based approach can help to determine when and how evaluations are selected, prioritised and scaled based on the value, impact and risk profile of a particular government activity or program.

Taking a strategic, risk‑based approach can help to:

- identify and prioritise when an evaluation is required to complement or enhance routine performance monitoring and reporting

- determine which type of evaluation is most appropriate

- identify what key questions need to be addressed in an evaluation

- incorporate the right level of evaluation planning at the initial program design stage to ensure there is an appropriate evidence base for future evaluations or reviews

- collect the required data for monitoring and evaluation throughout program implementation and align this to existing data collections where possible.

Identify and prioritise evaluation effort

What to evaluate, when to evaluate, and the type of evaluation needed in a particular circumstance depends on a range of factors.

The topic, scope and size of each proposed evaluation will vary, so these factors will not apply in the same way or carry the same relative importance in every evaluation decision.

Things to consider

| Factors | Key questions |
|---|---|
| Government or entity priority | Is the activity, program or evaluation topic a government initiative or directly connected to a government priority or an entity’s objective? Is there a Cabinet or ministerial directive to undertake a comprehensive evaluation? Is there a Ministerial Statement of Expectations? |
| Stakeholder priority | Does the activity, program or evaluation topic relate to a priority issue for the sector or other key stakeholders? Does the activity or program have an important relationship to other program areas? |
| Monitoring, review and stakeholder feedback | Have issues with the activity or program objectives, implementation or outcomes been identified through monitoring, review or stakeholder feedback? Does performance information suggest areas where improvements are required? Has termination, expansion, extension or change in the activity or program been proposed? |
| High profile, sensitivity or cost | Does the activity, program or evaluation topic have a high profile, high sensitivity or a high cost? Has it attracted significant public attention or criticism? |
| Evaluation commitment | Is there a commitment to the evaluation in a new policy proposal, an Australian Government budget process or a public statement? Are there any sunsetting legislative instruments or Policy Impact Analysis requirements that apply? |
| Previous evaluations | Has the activity, program or topic been evaluated previously? This may help determine whether a new evaluation is worthwhile, particularly if: |
| Other relevant activity | Does the evaluation overlap with or duplicate relevant monitoring, review or audit activity? |
| Internal/external | Would the evaluation be most appropriately conducted internally, by external providers or by a combination of both? |
| Timeframes | When should the evaluation be delivered to best inform decision-making? |
| Resources and funding | What are the expected duration, costs and resource requirements? |
| Data and information sources | What relevant data and information sources are available? What new sources may be required? |
| Impact | What are the expected impacts on an entity’s activities, programs, policies, processes and stakeholders? |
| Risk – conducting evaluation | What are the potential risks associated with conducting the evaluation? |
| Risk – not conducting evaluation | What are the potential risks associated with not conducting the evaluation? |
| Other considerations | Are there other factors specific to the evaluation that need to be considered? |

Different evaluation types

Different types of evaluations draw on a range of methods and tools to measure and assess performance.

These approaches can be organised in different ways, with common distinctions made between formative and summative evaluations.

Formative evaluation

Questions about the level of need, policy design and implementation/process improvements are usually best answered during the early design and implementation/delivery stages.

Summative evaluation

Questions about program outcomes and impacts are usually best answered near or at the end of the policy or program, or once it has matured.

Rapid approaches

Rapid approaches are also used in the public sector to meet the information needs of decision‑makers in uncertain, resource‑constrained environments.

Select fit-for-purpose tools and approaches

It is important to select tools and approaches that are fit for purpose based on the specific program or activity and the purpose of the evaluation.

What type of evaluation is best depends on a combination of:

- the stage and maturity of the program or activity

- the issue or question being investigated

- what data or information is already available

- the timing of when evaluation findings are required to support continuous improvement, accountability or decision-making.

For information about things to consider at each stage of planning and conducting an evaluation, see How to evaluate.

Evaluation at different stages of the policy cycle

Evaluations can be used to support continuous improvement, risk management, accountability and decision‑making at various stages in the policy cycle.

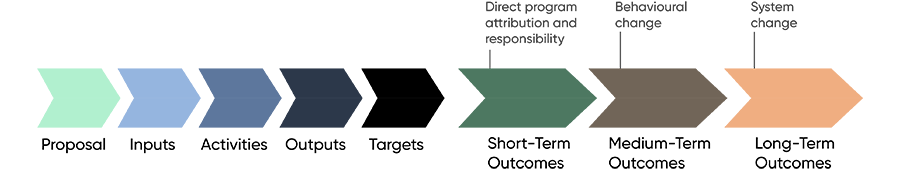

Program logic

One way of planning evaluation across these stages is through a program logic model.

The purpose of a program logic model is to show how a program works and to draw out the relationships between resources, activities and outcomes.

While this program logic is presented in a linear fashion, in practice there will be continual feedback loops where evaluation findings and performance information help to inform continuous improvements in policy development and program design.

Broadly, the stages at which an evaluation may be conducted include:

- before a program or activity is implemented (that is, in the early design phase)

- during the implementation and/or ongoing delivery phase of a program or activity

- after a program or activity has been in operation for some time

- rapid evaluation of a program or activity to inform urgent decision‑making.

Before implementation

- What is the problem?

- To what extent is the need being met?

- What can be done to address this need?

- What is the current state?

- Will the proposed intervention work?

During implementation

- Is it working?

- Is the program or activity operating as planned?

- What can be learned?

After a program is in operation for some time

- Did it work?

- Is anyone better off?

- Is the program or activity achieving its objectives?

- What has the impact been?

- What are the benefits relative to the costs?

Rapid evaluation to inform urgent decision‑making

- What evidence is available?

- What action is required?

- What caveats do I need to put on the findings?