This guide is designed to support Commonwealth officials involved in developing New Policy Proposals (NPPs) to:

- plan how a policy, program or activity will be monitored and evaluated from the beginning, so the necessary performance information and data is collected during implementation

- consider and determine fit-for-purpose evaluation and performance monitoring approaches for specific government programs and activities delivered in specific contexts

- ensure robust evaluation planning underpins all proposals considered in the Budget and Cabinet processes, in accordance with the Commonwealth Evaluation Policy, the Cabinet Handbook and the Budget Process Operational Rules.

This guide complements the material in Templates, tools and resources.

Good practice

The Commonwealth Evaluation Policy emphasises the importance of:

- establishing fit-for-purpose performance monitoring and evaluation arrangements early in the policy cycle

- using strategic, risk-based approaches to identify, prioritise and schedule evaluation activities.

Planning how a new or amended program or activity will be monitored and evaluated before implementation commences is good practice. This allows:

- robust performance monitoring to be established early in the policy cycle, and aligned to existing data, where possible

- baseline data and information to be established from the outset so that changes can be measured and assessed over time

- credible data and evidence to be collected throughout implementation to support future evaluations, reviews and performance assessments.

Key considerations

For all NPPs, it is important to consider the following:

- Is there robust supporting evidence, or are previous evaluation findings available to support the NPP?

- Is baseline data available that will allow progress and changes to be measured over time? If not, is there a plan to establish baseline data in the early stages of implementation? (For tools to assist with this step, see Templates, tools and resources.)

- Is there an evaluation plan for how the proposal will be measured and assessed? How will progress and success towards delivering the outcome be monitored and evaluated?

- Are there existing frameworks, standards or benchmarks that the NPP will be assessed against (for example, international benchmarks, existing longitudinal data sets)?

- Can existing administrative data be used to evaluate the outcomes and impacts of this NPP?

- If necessary, have evaluation resources been considered as part of the costing process?

Develop a fit-for-purpose evaluation plan

An evaluation plan clarifies and documents how you:

- will monitor and evaluate a policy or program over time

- intend to use performance insights generated from evaluation and performance monitoring activities to support decision-making and continuous improvement.

There is no one-size-fits-all approach. It is important to select tools, templates and approaches that are fit for purpose given the scale, scope, design and purpose of a specific policy or program, delivered in a specific context.

Integrated approach

The Commonwealth Evaluation Policy emphasises the benefits of an integrated approach that aligns internal review, assurance, evaluation and performance monitoring activities with external requirements, such as reporting requirements under legislation.

Clarify evaluation questions upfront

The type of evaluation plan required will depend on what decision-makers want to learn about the proposal, policy or program, and how easy it is to define and measure outcomes. For example, in some cases benefits realisation may be all that is required to report on a project’s performance and achievements. In other cases, an evaluation may be needed to provide robust evidence against hard-to-measure outcomes.

All proposals must be fit to evaluate

Irrespective of whether a formal evaluation is deemed necessary at the point of a government decision, all proposals, policies and programs must be “fit to evaluate”. This means you need:

- clearly articulated and measurable objectives and outcomes

- robust performance monitoring to support future evaluations, if needed.

A fit-for-purpose evaluation plan should ensure robust data and evidence are collected throughout implementation so that the effectiveness and efficiency of programs can be measured and assessed over time.

For an evaluation plan template see Templates, tools and resources.

Note: use of this template is not mandatory.

Start with the end in mind

- What are the benefits and expected outcomes of the NPP?

- Are the intended outcomes and impacts clearly articulated and measurable?

- When are the expected benefits likely to be realised?

- Will credible data and evidence be collected throughout implementation to support future evaluations, reviews and performance assessments?

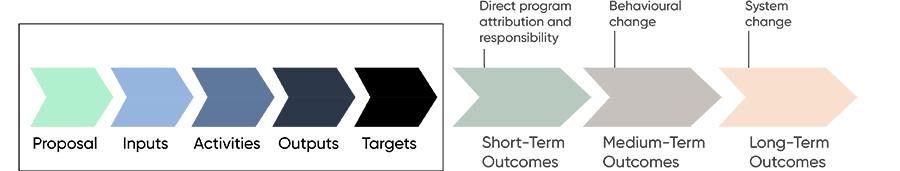

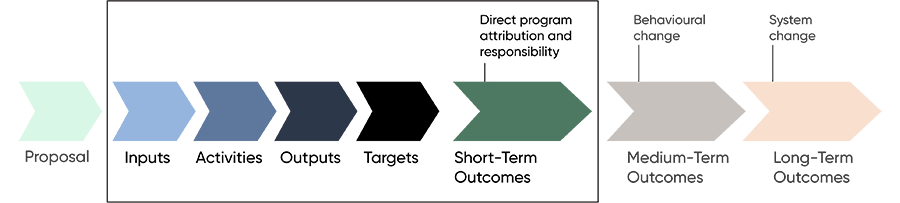

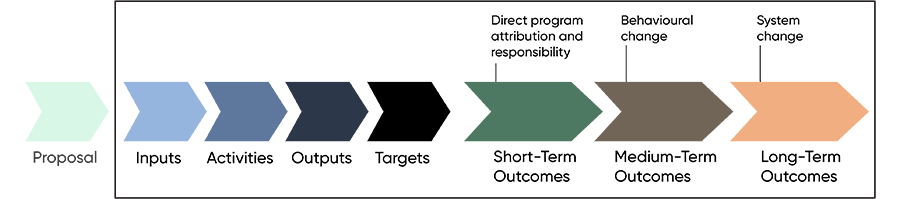

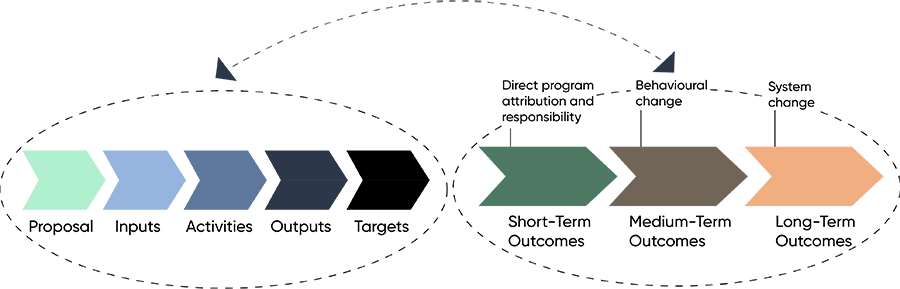

- Are short, medium and long-term outcomes identified? Can these be measured over time?

Test assumptions

- Is there a sound program logic and theory of change?

- Theory of change and logic models are used to plan how and why a desired change will occur, helping you link what you want to achieve with how you will measure success (templates and tools to help you develop a program logic and/or theory of change are available at Templates, tools and resources).

- Are any comebacks for future review/evaluation/critical decisions consistent with the timing of expected changes based on the program logic (that is, will the evidence be available to support informed decision-making)?

Proportional effort and resources

Is an evaluation required, or is robust performance monitoring sufficient for program assurance?

If an evaluation is required, is there a common understanding of what needs to be evaluated, and why?

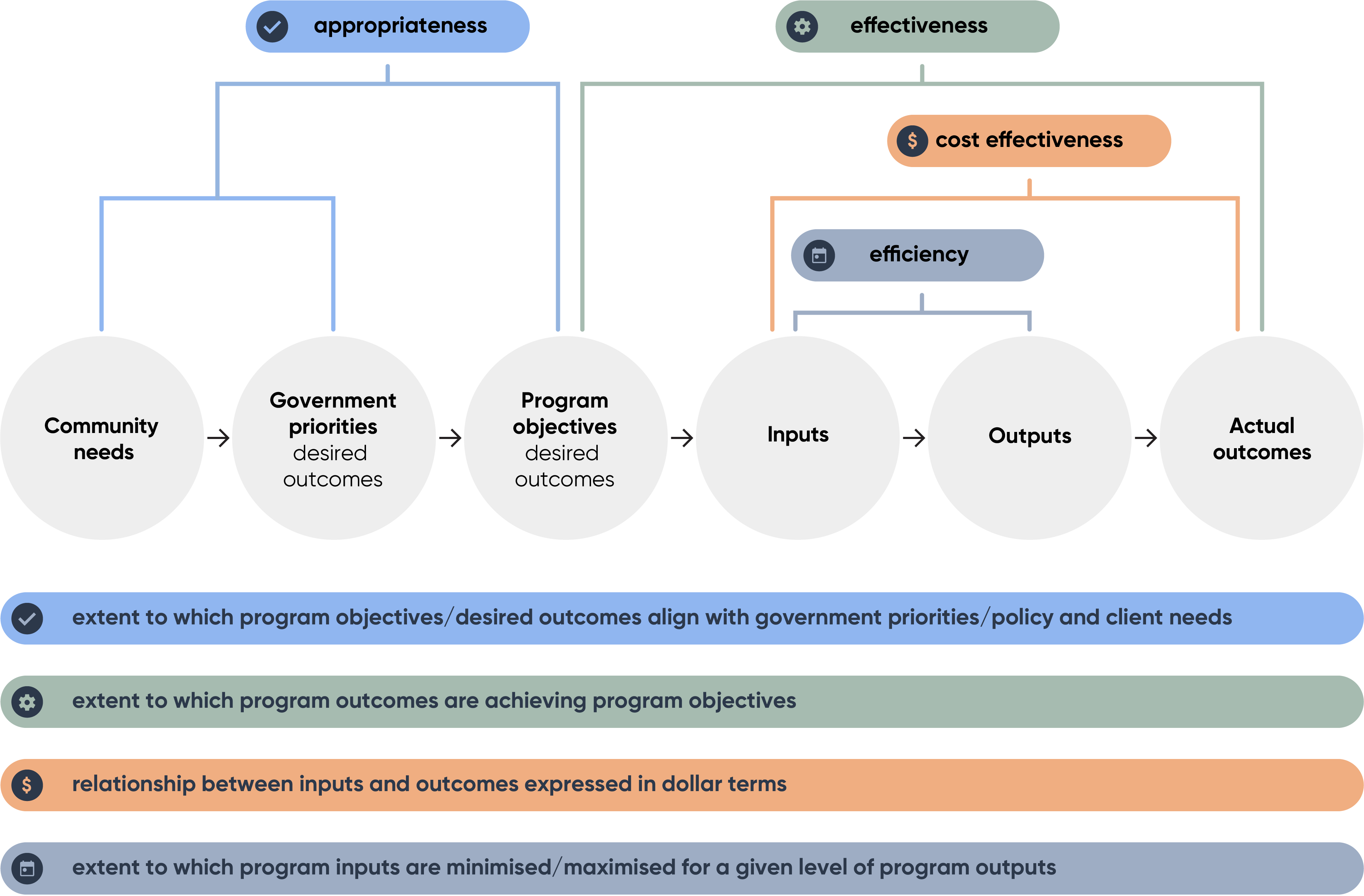

Evaluations often focus on assessing the appropriateness, efficiency and/or effectiveness (and the related dimension of “cost-effectiveness”) of a government program or activity. Determining which aspects will be evaluated for a specific program or activity will help to define scope and potential costs.

Key question: Is the scale of effort and resources allocated to an evaluation proportional to the value, impact, strategic importance and risk profile of the program or activity?

Consider the cycle

When will evidence be available to demonstrate program effectiveness and/or support future decision-making?

The focus of evaluation will vary depending on where a program/activity is in the policy cycle – see below.

Before implementation

Key questions: Is there evidence to substantiate the problem/need being addressed? Will it work? To what extent is the need being met? What can be done to address this need? What is the current state?

During implementation

Key questions: Is it working? Is the program or activity operating as planned? What can be learned?

After a program or activity is in operation for some time

Key questions: Did it work? Is the program or activity achieving its objectives? What has the impact been? What are the benefits relative to the costs?

Rapid evaluation to support urgent decision-making

Key questions: What evidence is available? What action is required? What caveats do I need to put on the level of support for this proposal? What comebacks might provide assurance at a later point?

For more information, see When to evaluate.

Principles‑based approach

Are those responsible for the delivery of results satisfied that robust, fit‑for‑purpose evaluation and performance monitoring plans are in place to measure and assess success over time?

The Commonwealth Evaluation Policy establishes a principles‑based approach for the conduct of evaluations across the Commonwealth. This empowers those responsible for the successful delivery of results to determine fit-for-purpose evaluation plans for specific programs delivered in specific contexts.

This recognises that:

- It is not feasible, cost-effective or appropriate to fully evaluate all government programs

- The cost of evaluation must be balanced against the risk of not evaluating, noting that sometimes robust performance monitoring by itself will be sufficient to meet the performance reporting requirements under the Public Governance, Performance and Accountability Act 2013

- The appropriate timing and type of evaluation required to support decision-making, continuous improvement and accountability needs to be determined on a case‑by‑case basis to ensure the overall approach is fit for purpose

- In some cases, well‑designed data collection and performance monitoring established during the design phase of a new or amended program or activity can help to refine it over time. In other cases, evaluation will need to be more comprehensive to assess whether an activity or program is appropriate, effective and/or efficient.

Irrespective of whether a formal evaluation is deemed necessary, all programs and activities must be “fit to evaluate”.

This means your evaluation plan must ensure that robust data and evidence is collected throughout implementation to allow the effectiveness and efficiency of programs to be measured and assessed over time.