The image shows different, but complementary, evidence‑generating activities that help Commonwealth entities and companies to meet high standards of governance, performance and accountability.

Many of these activities share similar approaches and analytical techniques. Together, they represent the fundamental links in an accountability and assurance chain that is designed to contribute to continuous improvement and better public administration, and to identify better practice approaches.

In this context, evaluation is one way of generating evidence to measure and assess the extent to which government programs and activities are achieving their objectives and outcomes.

Along with other evidence‑generating activities, evaluation supports Commonwealth entities and companies to meet their obligations under the Public Governance, Performance and Accountability Act 2013 (PGPA Act), associated instruments, and related whole‑of‑government frameworks and policies.

Evaluation

Evaluation is the systematic and objective assessment of the design, implementation or results of a government program or activity for the purposes of continuous improvement, accountability and decision‑making.

Evaluations are typically designed to improve the performance of government programs and activities, assess their effects and impacts, and inform decisions about future policy development.

Evaluations can use theory of change and logic models as part of their design to describe how and why a desired change is expected to happen in a particular context. These models are tools that help link what you want to achieve with how you will measure success.

It is expected that a strategic, risk‑based approach will be used to identify and prioritise when an evaluation is required to support or complement routine performance monitoring and reporting.

Research

A broad range of research can inform evaluation. You can conduct research for different reasons.

For example, pure research often goes beyond identified problems, responses, and issues of scalability, and focuses instead on informing longer‑term policy development, modelling and investigation.[1]

Relationship between research and evaluation

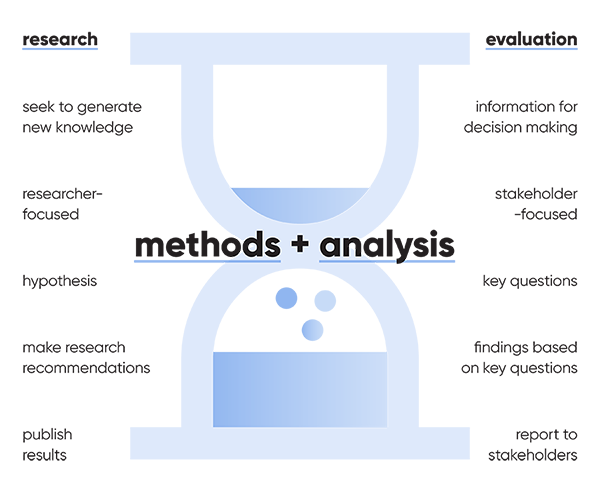

The relationship between research and evaluation prompts much academic discussion.

From a practical point of view:

- evaluation is typically interested in generating specific, applied knowledge to address key questions, and is controlled by those funding or commissioning the evaluation

- research typically produces more general knowledge, can be more theoretical, and is usually controlled by the researchers.[2]

The role of research and evaluation in a Commonwealth context is shown in the image below.

Performance measurement and monitoring

Performance measurement is a continuous process of collecting and analysing data to compare how well a project, program, or policy is being implemented against expected results.

The related concept of performance monitoring involves performing and analysing routine measurements to detect changes.

As with evaluation, program logic can be used to assist in the design of performance management frameworks.

Performance measurement and monitoring are used to:

- inform managers about the progress of ongoing activities

- detect problems that may be addressed through corrective actions

- provide information to support accountabilities and reporting obligations under the PGPA Act and associated instruments

- contribute to an evidence base for future evaluations or reviews, particularly in helping develop a program logic.

The Public Governance, Performance and Accountability Rule 2014 establishes legislative requirements to measure and assess performance, and establishes the characteristics of good performance measures.

For more information, visit PGPA legislation, associated instruments and policies.

Quality improvement

Quality improvement is a systematic, formal approach to the analysis of performance and efforts to improve performance.[3]

It is commonly used in the health care sector to systematically improve the way care is delivered to patients, to make changes that support better health outcomes and to improve system performance.

A number of approaches exist to help collect and analyse performance data and test change. Examples include quality improvement models, business process improvement methodologies and continuous improvement approaches.

These techniques are generally designed to improve internal processes, practices, costs or productivity for specific programs and activities assessed against a set of standards.

Both evaluation and quality improvement processes are often designed to bring about improvements in delivery or system performance.

Evaluation can be used to support continuous improvement approaches by helping to identify and clarify specific aspects of internal processes, performance or outcomes that require attention.

Audit

Audits typically involve an independent examination of the financial and non‑financial performance information of a Commonwealth entity or company. They provide assurance and identify areas where public administration can be improved.

Arrangements across the Commonwealth

Audit arrangements are in place across the Commonwealth to support accountability and transparency. They drive performance improvements in the public sector through:

- independent reporting to the Parliament (see role of the Auditor-General and the Australian National Audit Office)

- independent advice to accountable authorities of Commonwealth entities (see RMG-202 Audit Committees)

- requirements for Commonwealth companies to produce an auditor's report as part of their annual reports (see RMG‑137 Annual reports for Commonwealth companies).

Audit and evaluation differences

Both evaluation and performance audits provide an opportunity for a holistic review of a government program or activity.

They share some approaches and analytical techniques, and both provide input to decision‑makers that helps to improve performance and identify better practice.

A key difference relates to scope:

- evaluations can assess the effectiveness, efficiency or appropriateness of a government program or activity, including both policy and administrative matters, across the policy cycle

- performance audits involve the independent and objective assessment of all or part of an entity’s operations and administrative support systems – they do not extend to assessing the policy merit of a program or activity.

For more information on the legislative and policy requirements that apply to Commonwealth entities for evaluation, see Why evaluate.

[1] Appendix B: Evaluation in the Australian Public Service: current state of play, some issues and future directions (Australia & New Zealand School of Government)

[2] Ways of framing the difference between research and evaluation (Better Evaluation)

[3] Basics of Quality Improvement (American Academy of Family Physicians)